XGBoost: the Algorithm

Machine learning Gradient boostingXGBoost (eXtreme Gradient Boosting) is a machine learning tool that achieves high prediction accuracies and computation efficiency. Since its initial release in 2014, it has gained huge popularity among academia and industry, becoming one of the most cited machine learning library (7k+ paper citation and 20k stars on GitHub).

I was first introduced to XGBoost by one of my good friends back in 2017, the same time as I started to work on some Kaggle competitions. XGBoost quickly became my go-to approach as often times I could achieve reasonable performance without much hyperparameter tuning. In fact, XGBoost is the winningest method in Kaggle and KDDCup competitions during 2015, outperforming the second most popular method neural nets [1].

Earlier this year, I had a chance to write decision trees and random forests from near scratch. It was amazing that the math behind tree-based methods is so neat and "simple", yet the performance could be very good. I was able to go for an extra mile, managing to build a simple implementation of XGBoost. To do so, I had to study the original paper of XGBoost in depth (I probably only touched 30% of the details). I feel it is a good time to revisit what I have learned during the process.

Note that various tree boosting methods exist way before the invention of XGBoost. The major contribution of XGBoost is to propose an efficient parallel tree learning algorithm, and system optimizations, which combined together, resulting in a scalable end-to-end tree boosting system. The making of XGBoost not only requires understanding in decision trees, boosting, and regularization but also demands knowledge in computer science such as high-performance computing and distributed systems.

I will go over the basic mathematics behind the tree boosting algorithm in XGBoost. Most of the materials are referenced directly from the XGBoost paper. I'm writing this blog to strengthen and further my understanding of XGBoost, and at the same time to share my view of the algorithm.

Decision trees and gradient tree boosting

We will follow the notation from the paper. Consider a dataset with examples and features . Let denote the space of regression trees, where is the number of leaves in the tree, represents a possible structures of the tree that maps to the corresponding leaf index, is the leaf weight given the observation and a tree structure . A key step of any decision tree algorithms is to determine the best structure by continuously splitting the leaves (binary partition) according to certain criterion, such as sum of squared errors for regression trees, gini index or cross-entropy for classification trees. Finding the best split is also the key algorithm for XGBoost. In fact, all algorithms listed in the paper are related to split finding. The resulting split points will partition the input space into disjoint regions as represented by leaf . A constant is then assigned to leaf , that is Thus can also be expressed as which is equivalent to .

Now we can represent a tree ensemble model as a sum of additive tree functions: where each corresponds to an independent tree structure and leaf weight . The optimal tree structures and weights are then learned by minimizing the following object. where is a regularization term to penalize complex models with exceedingly large number of leaves and fluctuant weights to avoid over-fitting.

The boosting tree model can be trained in a forward stagewise manner [2]. Let denote the prediction of instance at the -th iteration, by and , one needs to solve to minimize the following: It is quit straightforward to calculate the given the regions . On the contrary, finding the best which minimize could be a very difficult task for general loss functions. To find an approximate yet reasonably good solution, we first need to find a more convenient ("easy") criterion. Here is where numerical optimization via gradient boosting comes into play. A second-order approximation of loss function was used to show that the AdaBoost algorithm is equivalent to a forward stagewise additive modeling procedure with exponential loss [3]. The XGBoost paper borrowed this idea to approximate the objective by where and are first and second order gradient, and the constant term is omitted.

Given or equivalently , from , the paper shows the optimal weight in can be expressed as and the corresponding optimal value is

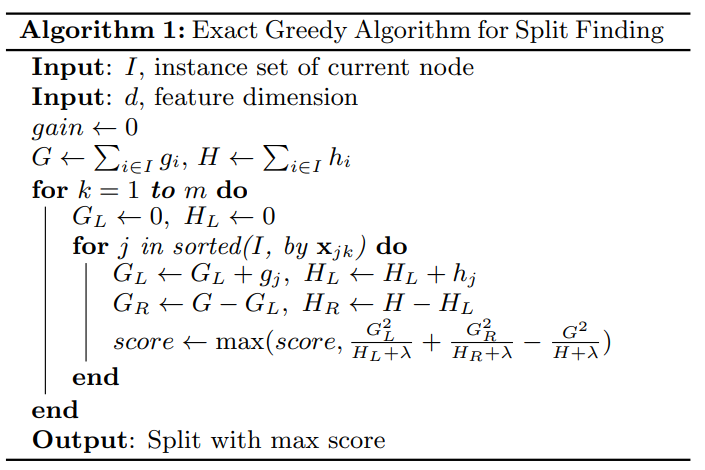

Another difficulty for finding the optimal tree structure is that the possible ways of partition the input space is numerous. Hence, the second approximation is to use a greedy algorithm that starts from a single leaf and iteratively adds branches to the tree. This is a very common approach same as the recursive binary partitions adopted in CART [2]. Let , can be decomposed as which means the splitting can be processed independently across all leaves (parallelism!). Consider and as the disjoint left and right regions after splitting region . Then the loss reduction after the split is given by The best split point will then be the one resulting in the largest value of . An exact greedy algorithm of finding the split point is given as follows.

Besides the regularized objective mentioned in , two additional techniques are used to further prevent overfitting. The first technique is to shrink the weight update in by a factor , oftentimes also called a learning rate. (Recall the benefits of shrinkage methods such as ridge regression or Lasso.) The second technique is column (feature) subsampling, a technique used in Random Forest to improve the variance reduction of bagging by reducing the correlation between the trees.

comparison

Notice the XGBoost algorithm is different from the Gradient Tree Boosting Algorithm MART [4] showed in the ESL book. The MART is also used in the package [2] besides Adaboost. Let's take a closer look at the weight update equation . Define as the instance set of leaf and as the cardinality of . Suppose we fix the and ignore the regularization term, we can express as The nominator and denominator can be considered as the estimated first and second-order gradient over with respect to , the latest (accumulated) leaf weight. Does this formulation look familiar? It resembles Newton's method in convex optimization where the update is subtracting the first-order gradient divided by the Hessian matrix (like the one used for solving logistic regression but in a non-parametric fashion). The MART method is using the steepest gradient descent with only first-order approximation. As a result, we can expect XGBoost has a better quadratic approximation and have a better convergence rate to find the optimal weight (in theory).

An example with logistic regression for binary classification

XGBoost can be used to fit various types of learning objectives including linear regression, generalized linear models, cox regression, and pairwise ranking [5]. Here we will briefly discuss how to derive the weight update in for logistic regression.

Let denote the binary response 0 or 1 for the th observation . Let denote the accumulated weight for after the th iteration, that is where is the update given in with . We will hide the superscript of from now on. By the logit link function, we have as probability estimate for .

For logistic regression, the "coefficients" are estimated based on maximum likelihood. More specifically, we will choose to minimize the negative log-likelihood function in as The first and second order gradients immediately follows:

We can then use the exact greedy algorithm to find all splitting points and partition the input space into s. Finally, calculate the corresponding based on the gradient information derived above.

Conclusion

In this post, we briefly go over the key algorithm in XGBoost to find the splitting points in boosting trees. The idea is behind quadratic approximation on the loss function, and the resulting algorithm and weight update are intuitive and straightforward. Without a doubt, the exact greedy algorithm discussed here alone is not good enough for a system with "eXtreme" in its name. What happens when the data is too large to be fit into the memory? Can we scale the algorithm using parallel and distributed computing? How do we efficiently store and process a sparse dataset? All the answers can be found in the second half of the paper with more implementation details in the Author's presentations and of course, the Xgboost repository on GitHub. Although some of the related system and computation topics are beyond my current knowledge, it is definitely worth trying to understand the basic tools and ideas. I hope I could make a follow-up post to discuss high-performance computing concepts used in XGBoost when I feel more confident in the subject in the near future.

References

[1] T. Chen and C. Guestrin. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 785-794, 2016.

[2] T. Hastie, R. Tibshirani, and J. Friedman. The elements of statistical learning: data mining, inference, and prediction. 2nd ed. New York: Springer.

[3] J. Friedman, T. Hastie, and R. Tibshirani. Additive logistic regression: a statistical view of boosting. Annals of Statistics, pages 337-407, 2000.

[4] J. Friedman. Greedy function approximation: A gradient boosting machine. Annals of Statistics, pages 1189-1232, 2001.